When starting to build a HomeLab, the first applications to run are normally ad blockers and Docker registries. Unless the goal is some extremely polished IaC pipeline, you'll reach for the quickest solution to your problem. Because it's your HomeLab and you are the only user, you obviously end up using basic authentication. And this is fine! You don't need anything more complicated when it's just you using the software!

But in every HomeLabber's life, the needs grow beyond just your own. You gain friends, co-workers, lovers and, believe it or not, they want to host applications too. And guess who is already paying to host a private cloud at their house? Now's your chance to cosplay as a cloud provider!

So, this is a journey (technically my journey) to make a private cloud that supports multiple tenants: moving off basic authentication and adding support for Docker's horrible OAuth token authentication implementation.

And this is where I guess you are stuck! Pardon my French, but how the F**K does anyone support this OAuth token standard? In this blog post we'll cover a 500 meter view of moving off basic authentication and onto Docker's token authentication.

Prerequisites

Before starting, I have a few assumptions.

I expect you are already running or using some type of Identity Management (IDM) software like Keycloak, Authelia or, my personal favourite, Kanidm. The Identity Management software should allow you to manage the users who will access your application, or in this case your Docker registry. You should be able to create users, groups and applications with OAuth2 support, and be able to add custom claims onto the application.

I also assume that your Docker registry is publicly available, along with your Identity Management software of choice. I guess if it's accessible over a VPN that's fine, but you should be striving to have everything available over the public internet so you can support the maximum number of clients without requiring them to go through a complicated onboarding process.

I also expect that you are running the open source Docker Registry and you have Basic Authentication configured.

Enterprise Solutions for Docker Registries

Now that you've reached a point where you want to support multiple clients, the search starts for a solution that works with your Identity Management software. Quick Google searches will lead you to Reddit threads where many recommendations are made. Some of the ones I found were:

- Harbor

- Gitea

- GitLab

- Quay

- Artifactory (I didn't expect this to have a free solution so I'm not linking it)

I think there are more, but I didn't look into them. And to be honest, I only took the time to look into Harbor. It has a bunch of great integrations, the main ones being image scanning and out of the box support for OAuth2.

Quay seemed like another solution, but I couldn't find their open source offering, which makes me think they no longer support an open source implementation of a Docker registry. However, this user seems to be using it with some success.

Also covers other solutions I don't touch on

The other solutions are of course self-hosted, GitHub-like Git hosting applications. These are out of scope for this blog post and the goal I had, so I skipped deploying and testing them.

Issues with Harbor

When evaluating Harbor for deployment, I thought it would be a small application that is easy to deploy. Like, how hard is it to host a docker image? While reviewing their docs, they presented a Helm chart that made installing it on Kubernetes easy (or so I thought!). However, once you pull back the curtain, you'll start to see the shit show which is Harbor.

Although you think you are deploying a simple solution, the reality is a Helm chart that attempts to deploy 9+ images, all of which have been custom built as a Harbor type of image. This makes it hard to understand what the source of each image actually is, and to know what you are about to deploy on your cluster. The chart also doesn't present a nice abstraction and overall is hard to implement.

I am starting to think that when a Helm chart has a values.yaml file that is 1000+ lines long, it might be a sign your chart is too complicated...and maybe so is your app. Deploying so many images does mean that it's scalable. But honestly, for a HomeLab with a handful of users, it's too complicated. I don't want to manage such a large distributed system. If all I had to run was Harbor, it seems like it would be a good solution, but I'm managing my whole private cloud. I need a simpler solution.

To put the final nail in the coffin, Harbor suffers from the same struggle that Traefik suffers from, namely horrible documentation (as of writing this in 2024). Many configuration options aren't clearly documented on their web page, and the margins are set up in such a way that word wrapping makes every option hard to read. I also just don't love not being able to use environment variables, but that's more of a personal issue.

Overall, this turned me off Harbor, and I sadly decided to delete my own 1000+ line Helm configuration file. There goes a day, I guess. Time to find a new solution.

Open Source to the rescue

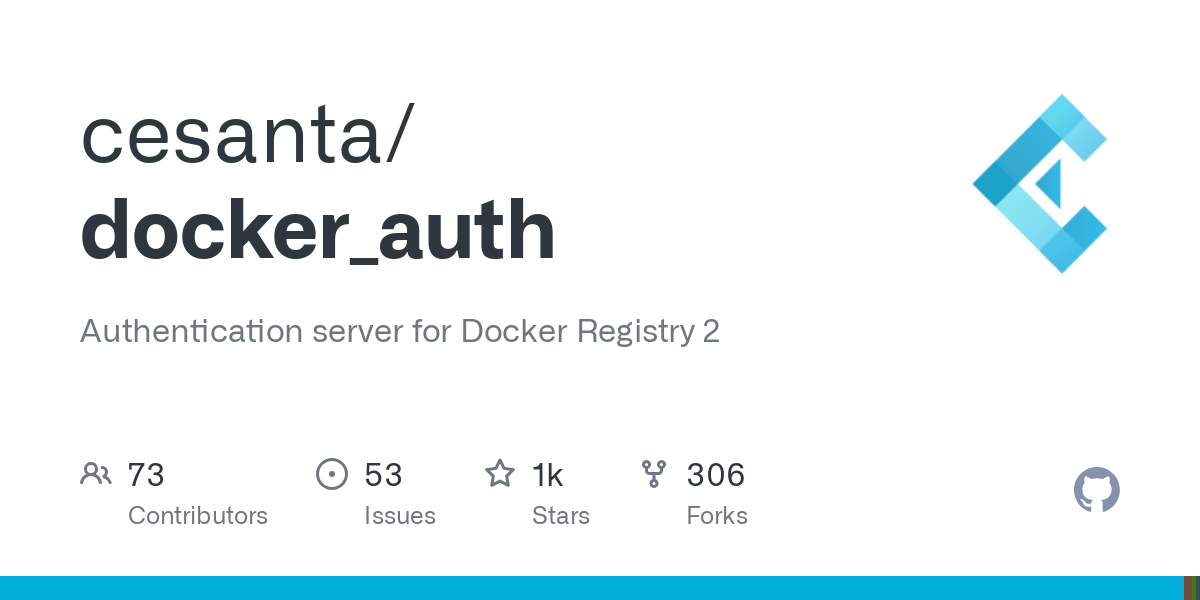

Spelunking on Reddit has its advantages, one being that I find it a bit easier to discover solutions used in the real world than by searching GitHub. One such post presented the idea of using a custom authentication application called docker_auth.

Post about self hosting authentication for docker registry

docker_auth is a Golang application that works as an authentication and authorization server, creating tokens that can be used against a Docker Registry. It seems like a robust solution, allowing for integration with many authentication clients and the ability to do complex authorization based on the OAuth2 token returned from your IDM.

It took a bit of work to wrangle the project into a usable state. Issues like docker images not being up to date (I ended up using the edge image) and documentation not being updated with the correct standards slowed me down from quickly adopting the project. The last commit was 4 months ago, so it seems like the project is a little dead. Not that it's an issue: if a project is completed, there is no reason to add more features. Overall, I think I can recommend docker_auth as a token provider for authentication with a private docker registry. However, there are some fixes I can recommend for it, which I'll cover later.

Token auth for Docker Registries

The real reason why you are here

Docker token authentication is weird. Unlike other applications, it won't handle getting the JWT on your behalf. No. Docker expects YOU to go get the token. If you are bored and want to read how the token service is supposed to work, there's some public documentation here. However, in my corner of the internet, we try running the open source solution before reading and implementing a spec.
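To make the "go get the token" step a little more concrete, here is a minimal Python sketch of what a client does with the registry's challenge. It assumes the challenge format described in Docker's token authentication documentation (a `WWW-Authenticate: Bearer` header carrying `realm`, `service` and `scope`). The realm and service values are borrowed from the configuration later in this post, and the repository name `alice/app` is made up for illustration.

```python
import re
from urllib.parse import urlencode


def parse_bearer_challenge(header: str) -> dict:
    """Split a `WWW-Authenticate: Bearer ...` value into its key="value" parameters."""
    scheme, _, params = header.partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError(f"expected a Bearer challenge, got {scheme!r}")
    return dict(re.findall(r'(\w+)="([^"]*)"', params))


def token_url(challenge: dict) -> str:
    """Build the URL a client requests (sending its credentials) to obtain a token."""
    query = {key: challenge[key] for key in ("service", "scope") if key in challenge}
    return f"{challenge['realm']}?{urlencode(query)}"


# Hypothetical challenge, as a registry might send it with a 401 response.
header = (
    'Bearer realm="https://registry.example.com/auth",'
    'service="Docker registry",'
    'scope="repository:alice/app:pull,push"'
)
challenge = parse_bearer_challenge(header)
print(token_url(challenge))
```

The client then calls that URL with its username and password, and sends the returned token as a `Bearer` credential on the retried registry request.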

Setting up docker_auth

I'll assume you know how to create some TLS certificates using cert-manager and create a deployment for docker_auth, so I'll just cover the configuration file required by docker_auth.

Assuming you're using OIDC as your login method, this is the way to configure docker_auth to use your IDM service. There's a lot going on here, so let's break it down.

    server:
      addr: ":443"
      certificate: "/certs/tls.crt"
      key: "/certs/tls.key"

    token:
      issuer: "Homelab"
      expiration: 900

    oidc_auth:
      issuer: "https://idm.example.com/oauth2/<client_id>"
      redirect_url: "https://registry.example.com/oidc_auth"
      client_id: "<client_id>"
      client_secret_file: "/oidc/secret.txt"
      level_token_db:
        path: "/tokens/tokens.ldb"
        token_hash_cost: 5
      registry_url: "https://registry.example.com"
      user_claim: email
      label_claims:
        - "groups"
      scopes:
        - "openid"
        - "email"

    users:
      "admin":
        password: example1234

    acl:
      - match: { labels: { "groups": "all" } }
        actions: ["push", "pull"]
        comment: "Admin users can push and pull all images"
      - match: { account: "admin" }
        actions: ["*"]
        comment: "Basic authentication administrator user"

Server

The Server block contains the settings the server needs to run. Here we are telling the server to listen on port 443 and to use our generated TLS certificates. It's recommended to run your Docker registry and its authentication component using TLS end to end, even behind a reverse proxy.

Token

The Token block contains information on who is issuing the token. This is important as we'll need this information when we run the Docker Registry. We can also specify an expiration time here. The value is in seconds, so 900 means the token expires after 15 minutes.

oidc_auth

We then need to configure a way for a user to log in. Here is where you put the OAuth2 configuration you get from creating a client in your IDM. We tell the program where to store the IDM tokens (in this case in a LevelDB database) as well as the claims we'll want to be able to match on during our acl steps.

users

This is the part I love the most. As a pragmatic HomeLabber, I started with Basic authentication to my docker registry, which has served me well. I'm able to migrate to this solution while keeping my existing basic user.

acl

In this section, we can provide complex rules. We can use the labels keyword to match on the claims we set during our authentication process. We can also match on account names. You can read more by referencing the Git repository.
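To show how I read the two rules in my config, here is a simplified Python sketch. It is not docker_auth's actual code. It assumes rules are checked in order and the first match decides, that a label rule matches when the user carries that value for the claim, and that `*` allows every requested action. It ignores the pattern-matching features the real matcher offers. The user names and group values are hypothetical.

```python
def matches(rule_match: dict, request: dict) -> bool:
    """Return True when every condition in the rule's match block holds."""
    account = rule_match.get("account")
    if account is not None and account != request["account"]:
        return False
    for label, wanted in rule_match.get("labels", {}).items():
        if wanted not in request["labels"].get(label, []):
            return False
    return True


def allowed_actions(acl: list, request: dict) -> list:
    """Apply the first matching rule; grant only requested actions it allows."""
    for rule in acl:
        if matches(rule["match"], request):
            if "*" in rule["actions"]:
                return list(request["actions"])
            return [a for a in request["actions"] if a in rule["actions"]]
    return []  # no rule matched: nothing is granted


# The two rules from the config above, written as Python data.
acl = [
    {"match": {"labels": {"groups": "all"}}, "actions": ["push", "pull"]},
    {"match": {"account": "admin"}, "actions": ["*"]},
]

alice = {"account": "alice@example.com", "labels": {"groups": ["all"]}, "actions": ["pull", "push"]}
admin = {"account": "admin", "labels": {}, "actions": ["pull", "push"]}
bob = {"account": "bob@example.com", "labels": {"groups": ["friends"]}, "actions": ["pull"]}

for request in (alice, admin, bob):
    print(request["account"], allowed_actions(acl, request))
```

In this reading, a user whose groups claim does not contain `all`, and who is not the basic `admin` account, gets no access at all.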

Reference implementation of many different types of access

Setting up Docker Registry

With docker_auth configured, we need to finish configuring our registry so it respects the fact that this service will be issuing the tokens users need to access their images. Again, the assumption is you are already using Basic Authentication for your current deployment.

    env:
      - name: REGISTRY_AUTH
        value: token
      - name: REGISTRY_AUTH_TOKEN_REALM
        value: https://registry.example.com/auth
      - name: REGISTRY_AUTH_TOKEN_ISSUER
        value: "Homelab"
      - name: REGISTRY_AUTH_TOKEN_SERVICE
        value: "Docker registry"
      - name: REGISTRY_AUTH_TOKEN_ROOTCERTBUNDLE
        value: /token/cert/tls.crt
      - name: REGISTRY_AUTH_TOKEN_JWKS
        value: https://idm.example.com/oauth2/openid/<client_id>/public_key.jwk
      # ... more values

Get these changes published and let's test it!
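If the registry rejects a token, the first thing I would check is whether the issuer and service line up on both sides. Below is a small Python sketch for that. It decodes the payload of a JWT without verifying the signature (debugging only) and compares the `iss` and `aud` claims with the values given to the registry. The sample token is built inside the example and is not a real docker_auth token. The helper names are mine.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding JWTs strip off."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_payload(token: str) -> dict:
    """Return the claims of a JWT WITHOUT verifying its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def check_against_registry(claims: dict, issuer: str, service: str) -> list:
    """List mismatches between the token and the registry's configured values."""
    problems = []
    if claims.get("iss") != issuer:
        problems.append(f"iss is {claims.get('iss')!r}, registry expects {issuer!r}")
    if claims.get("aud") != service:
        problems.append(f"aud is {claims.get('aud')!r}, registry expects {service!r}")
    return problems


def encode_segment(obj: dict) -> str:
    """Helper for the demo only: encode a dict as an unpadded base64url segment."""
    return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()


# Fake, unsigned token for illustration.
claims = {"iss": "Homelab", "aud": "Docker registry", "iat": 1700000000, "exp": 1700000900}
sample = ".".join([encode_segment({"alg": "none"}), encode_segment(claims), "signature"])

decoded = decode_payload(sample)
print(check_against_registry(decoded, "Homelab", "Docker registry"))
print("lifetime in seconds:", decoded["exp"] - decoded["iat"])
```

An empty list means the `iss` and `aud` claims match REGISTRY_AUTH_TOKEN_ISSUER and REGISTRY_AUTH_TOKEN_SERVICE. The signature still has to be checked by the registry itself.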

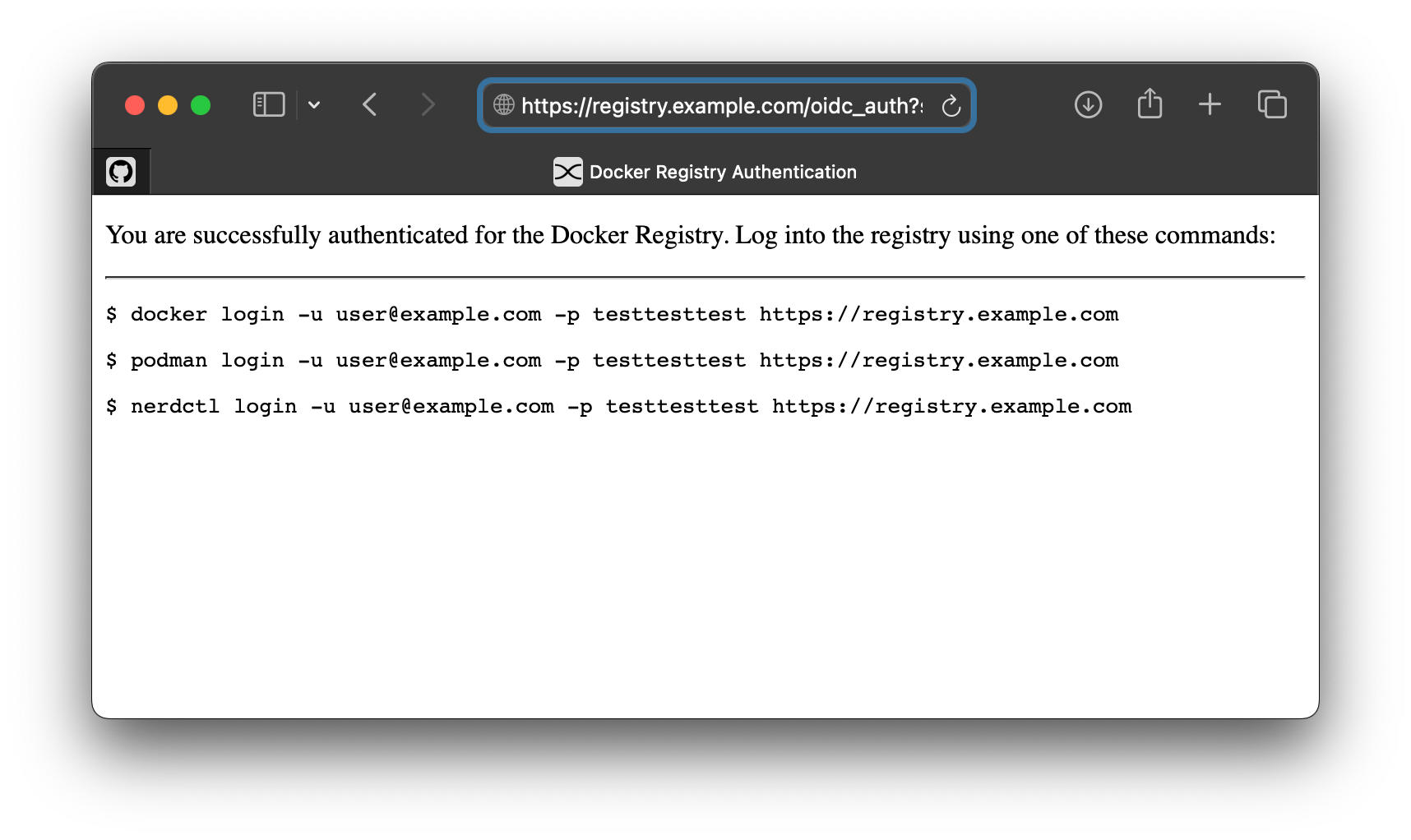

Let's get our token

docker_auth lets the user log in by visiting the path /oidc_auth, which initiates the flow. Not the sexiest of screens, but no worries, this should work. Let's click the login link.

Annnnd there's an error. OK, this is a side rant before we continue on with our regularly scheduled programming. You see, Kanidm cares about security. It tries really hard to default to the latest and greatest recommended authentication methods. That's why I run it as my identity management server.

However, because of this, many applications that don't use the most secure settings they can end up not working with it. In this particular case, the login failed because the URL does not include the state parameter. The URL in the address bar looks like the following:

    https://idm.example.com/ui/oauth2
      ?response_type=code
      &scope=openid%20email
      &client_id=<client_id>
      &redirect_uri=https%3a%2f%2fregistry.example.com%2foidc_auth

By appending &state=example to the URL, we solve the issue and are able to generate credentials we can now use to log in to our private registry.
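If you get tired of editing the address bar by hand, the same workaround can be sketched in a few lines of Python using only the standard library. The function name `with_state` and the client id `registry` are assumptions for the example. A random value is generated when no state is supplied.

```python
import secrets
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_state(url: str, state: str = "") -> str:
    """Return the authorization URL with a state parameter added if it is missing."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    if not any(key == "state" for key, _ in query):
        query.append(("state", state or secrets.token_urlsafe(16)))
    return urlunsplit(parts._replace(query=urlencode(query)))


# Same shape as the URL above, with a hypothetical client id filled in.
url = (
    "https://idm.example.com/ui/oauth2"
    "?response_type=code"
    "&scope=openid%20email"
    "&client_id=registry"
    "&redirect_uri=https%3a%2f%2fregistry.example.com%2foidc_auth"
)
fixed = with_state(url, "example")
print(fixed)
```

Normally the application that starts the login generates the state and checks it again on the callback, so pasting one in by hand only gets past the IDM's validation. It is a workaround, not a fix.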

This is something I'm looking into fixing by just running my own version of the project. Hopefully I'll be able to remove this section at some point.

Copy and paste the command into your terminal, and now we're cooking with gas, baby! Oh man, this has gotten me so excited, let's set up our registry UI to use this too!

Docker Registry UI Authentication

Venturing into the odd

So, the current login process is kinda weird, but, you know what, it works! I want to be able to use these docker credentials to also log in to my registry UI. I run Joxit/docker-registry-ui on my HomeLab.

Honestly, I don't love it, but it does the job. I'm not going to be the one to complain about a free and open source project that helps me achieve my goals. Joxit has spent some time working on this feature and has created a...confusing example of token-based authentication working with nginx and Keycloak. You can read about it in his example section.

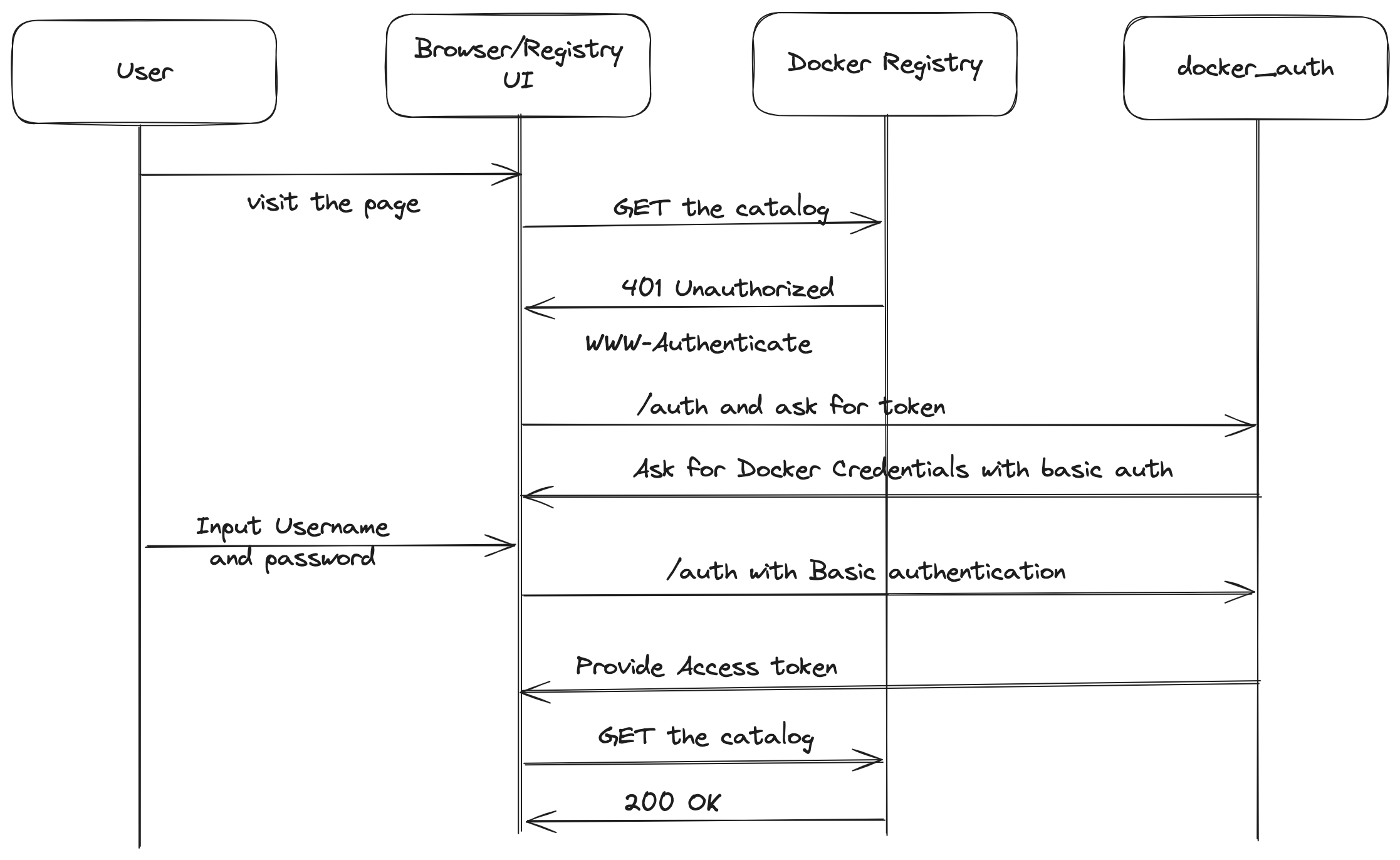

However, I have now spent time diagramming it out, and it's easier than it sounds. Let me share a diagram with you.

The diagram above shows us attempting to get the catalog of our private registry. We are relying on the WWW-Authenticate response header to prompt us for the user name and password of our docker user. Once we give those, the docker_auth service is able to provide us with the access token which is required for us to use the registry API.

Minor issues

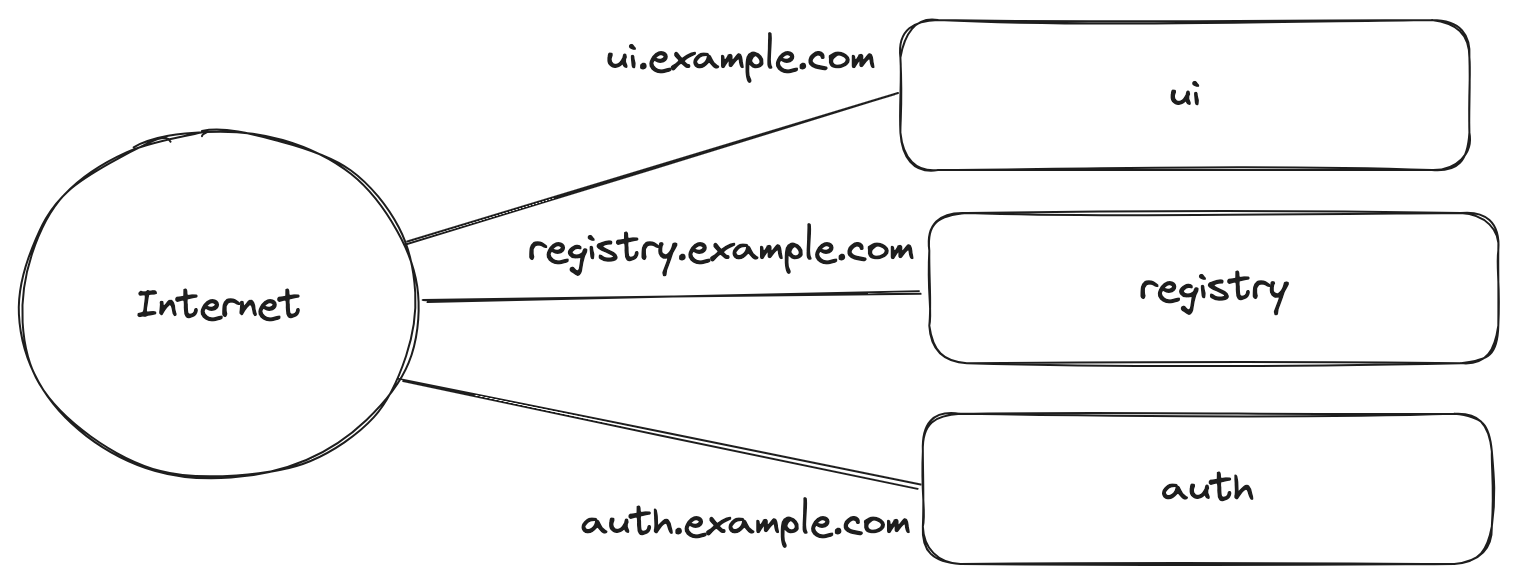

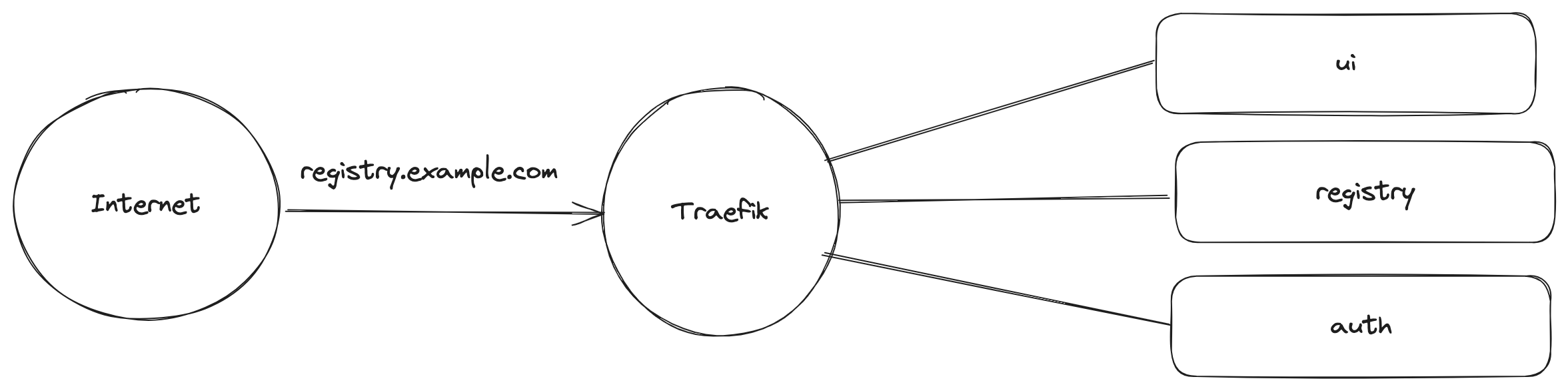

I want to believe most users would be running most applications behind a unique URL. Something like the diagram below.

However, this doesn't work when trying to authenticate with a different host using basic credentials and the WWW-Authenticate response header. The browser doesn't know that this is totally 100%, trust me bro, safe. So it blocks you.

For example, let's say we are on ui.example.com and then, while on that page, we reach out to auth.example.com, which responds with an ask for basic credentials. The browser sees that this is from another host that is not ui.example.com, so it doesn't prompt for the credentials. Instead we need to put everything behind a reverse proxy.

In this example, everything is hidden behind Traefik, which handles sending the traffic to the correct applications. I'm not sure if this is a good practice or not, but you know what they say, if it ain't broke, don't fix it...sure, I guess.
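The routing idea itself is small enough to sketch in Python. This is only an illustration of "one host, three backends chosen by path prefix". The service names `auth`, `registry` and `ui` mirror my setup, and the function does not model how Traefik actually prioritises its rules.

```python
# Path prefixes are checked in order; the first hit wins.
ROUTES = [
    (("/auth", "/oidc_auth"), "auth"),  # token requests and the OIDC login page
    (("/v2",), "registry"),             # the registry API lives under /v2
]


def pick_service(path: str) -> str:
    """Return the backend that should receive a request for this path."""
    for prefixes, service in ROUTES:
        if path.startswith(prefixes):
            return service
    return "ui"  # catch-all: everything else goes to the registry UI


for path in ("/auth", "/oidc_auth", "/v2/_catalog", "/"):
    print(path, "->", pick_service(path))
```

Because the UI, the registry API and the auth service all answer on the same host, the browser treats the credential prompt as coming from the page it is already on.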

My Traefik IngressRoute looks like the following:

    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: registry-ingress
    spec:
      routes:
        # Handle the particular requests for authentication
        - match: "Host(`registry.example.com`) && (PathPrefix(`/auth`) || PathPrefix(`/oidc_auth`))"
          kind: Rule
          services:
            - name: auth
        # If we are requesting something from the registry, it should start with a v2
        - match: "Host(`registry.example.com`) && PathPrefix(`/v2`)"
          kind: Rule
          services:
            - name: registry
        # Catch all. If nothing else matches, send the request to the UI
        - match: "Host(`registry.example.com`)"
          kind: Rule
          services:
            - name: ui

Conclusion

I began this process thinking that I would need to run Harbor if I wanted to provide token-based authentication to my docker registry. Realizing that it would be 9+ containers just to run it really turned me off.

I'm happy I was able to find the docker_auth project. I'm also happy that running it is relatively easy. However, there was one more small issue with getting this setup working. Again, this has to do with the integration between docker_auth and Kanidm.

Kanidm requires that a user gets their JWT claims by calling an introspection endpoint. However, the OAuth2 library that docker_auth uses for getting the token doesn't make this request. Worse than that, there's an issue open for it that doesn't seem to be getting any help 🫡

Because of that, I can't actually use the labels in the acl section of the docker_auth config...Oh well...I'll fix this all later.

In actual conclusion

I have token-based authentication to my private registry, and it was a wild ride to get it working. Contact me for more details and implementation files if you want to get this running yourself.